The era of Big Data and Automated Algorithms

The last few decades have been a flourishing period for technology. Thanks to the growing volume of data across a variety of fields and to increasing computational power, actors in both the public and private sectors have an exciting opportunity to capture human behavior. This unprecedented availability makes it possible to help humans with many tasks and to improve services in many ways: for instance, by optimizing business value chains, providing personalized services, or automating decisions.

Big data and automated algorithms invite the development of technologies that actively help humans make better decisions. Most of these technologies are based on machine learning (ML) and artificial intelligence (AI) algorithms. Machine learning, a branch of AI, is defined as the study of methods for programming computers to learn. Computers are applied to a wide range of tasks, and for most of these it is relatively easy for programmers to design and implement the necessary software. Machine learning targets the remaining tasks, where the rules are too hard to write by hand and must instead be learned from data.

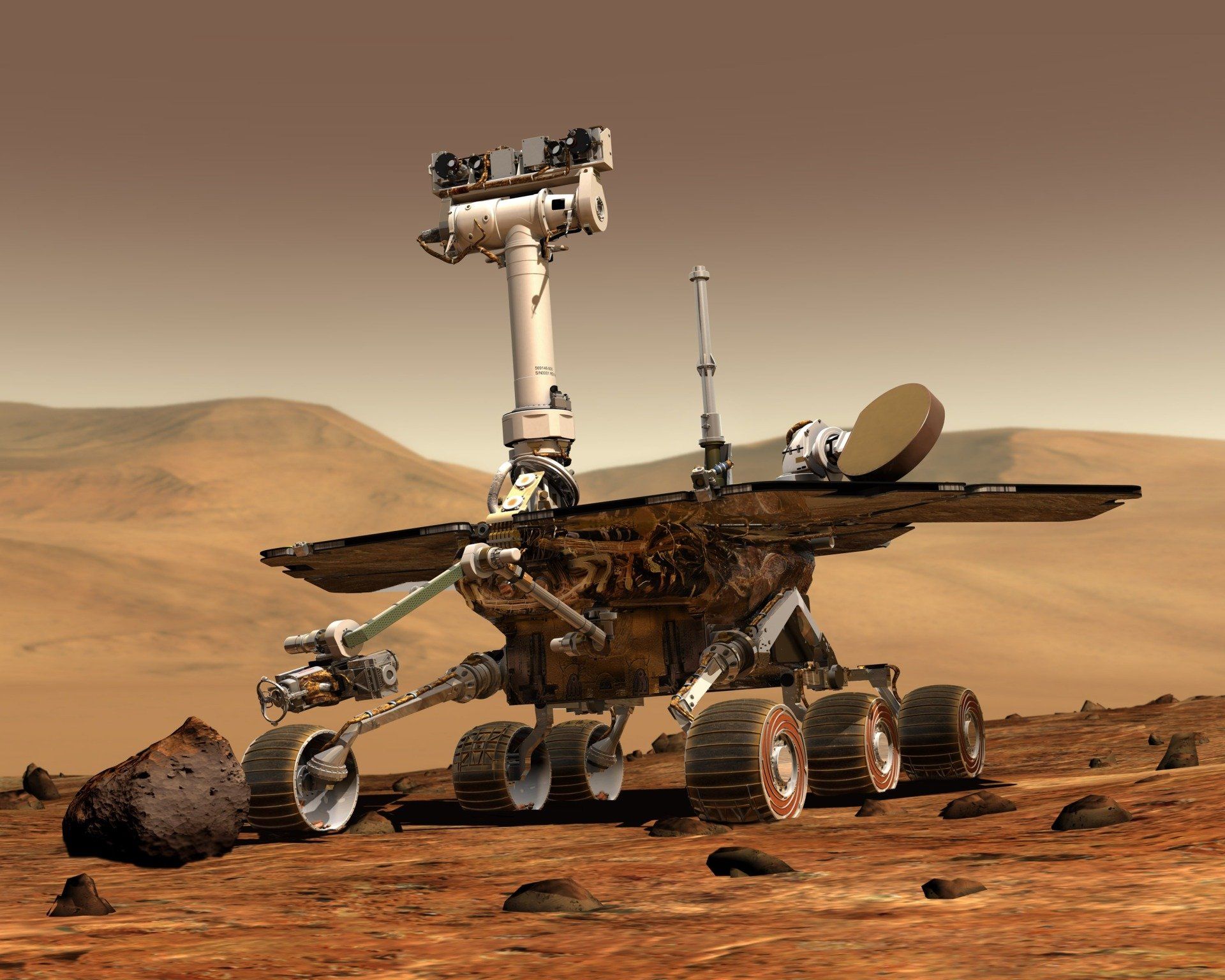

The benefits that machine learning and artificial intelligence have brought are undeniable. On the one hand, they have allowed the design of applications that can explore parts of the world that humans cannot visit. For example, in the field of space exploration, AI-driven machines explore parts of the universe that are totally hostile to human beings, and intelligent robots are programmed to mine for fuel. On the other hand, they have been employed in several fields to support human decision-making, e.g., in decision-based medical tasks.

However, in recent years several researchers, programmers, and academics have found these technologies to be discriminatory, especially against disadvantaged groups. A recent and striking example is the case of Google Vision AI, Google's image labeling tool. During the COVID pandemic, when images of people holding a thermometer started to spread, the tool was found to produce different results depending on skin color, correctly labeling the image as "electronic device" if there was a white person in it, but as "gun" if there was a black person in it. Image labeling is the basis of one of the technologies that has shown the most striking improvements: facial recognition. Since facial recognition technologies are an application of artificial intelligence and machine learning, they are not exempt from biases, which in some cases have led to discriminatory results. The goal of this blog series is to explore the lights and shadows of facial recognition by investigating case studies in different fields.

A focus on facial recognition

Formally, facial or face recognition is defined as “the science which involves the understanding of how the faces are recognized by biological systems and how this can be emulated by computer systems” (Martinez 2009). It is a biometric technique to uniquely identify a person by comparing and analyzing patterns based on their "facial contours"; in other words, it is a method of identifying or confirming a person's identity from their face. Other types of biometric software include voice recognition, fingerprint recognition, and retinal or iris recognition. This technology is primarily used for security and law enforcement purposes, but it is becoming increasingly popular in other areas as well.

The pioneers of facial recognition took their first steps in the 1960s. In those years, although the recognition technique relied on manual marking of facial points, it was demonstrated that biometric recognition was possible. The 1980s saw linear algebra applied to facial recognition problems, laying the foundation for the future development of the technology. The first commercial use of facial recognition came in the early 1990s, when the Face Recognition Technology (FERET) program was launched by the Defense Advanced Research Projects Agency (DARPA) and the National Institute of Standards and Technology (NIST); the project involved building a database of face images. The technology has developed considerably since then: today, facial recognition systems can be used to identify people in photos, in videos, or in real time.

How does it work?

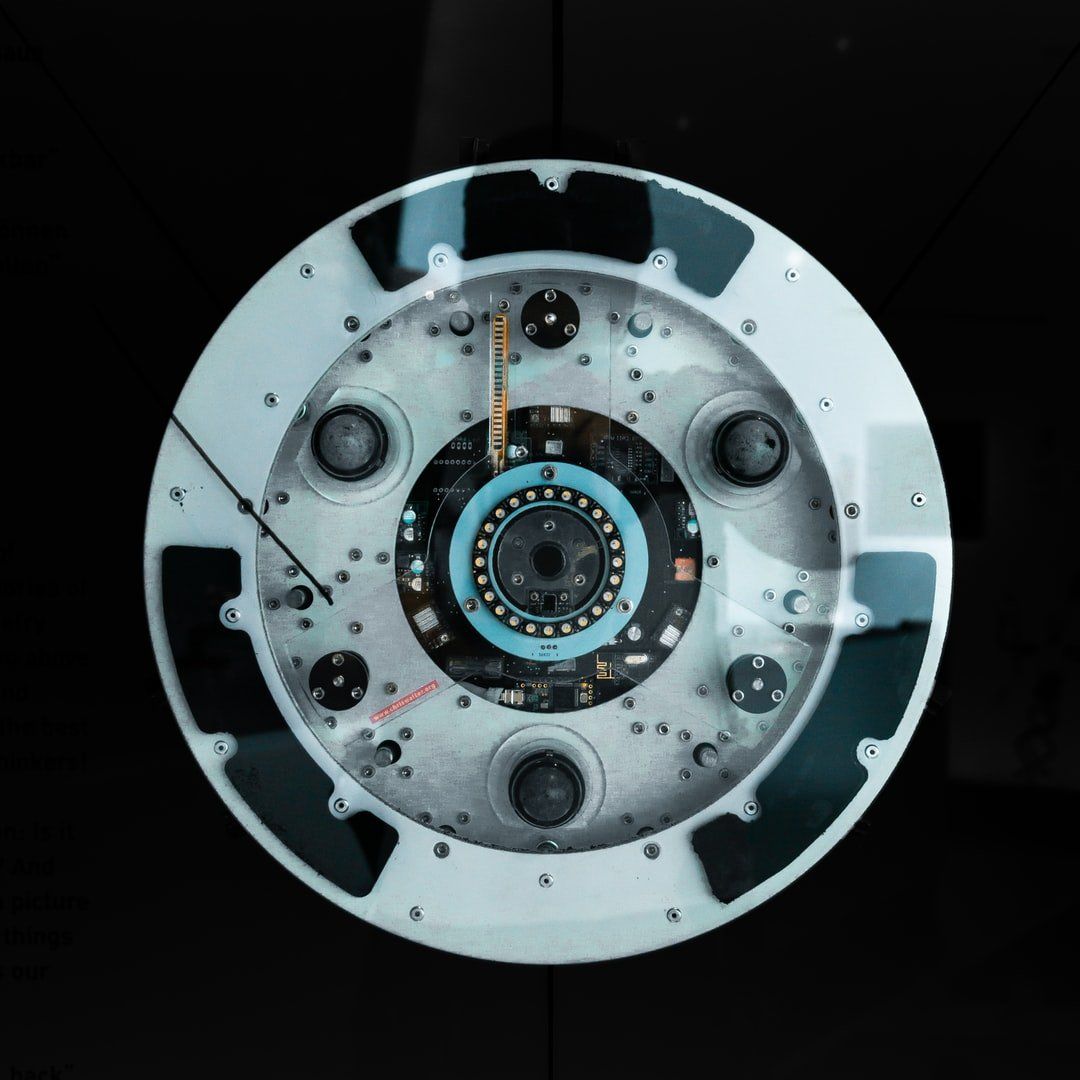

Many people are familiar with facial recognition technology thanks to Face ID, which is now widely used in the mobile market. In that setting, facial recognition doesn't rely on a huge database of photos to determine a person's identity; it merely recognizes one person as the sole owner of the device and denies access to everyone else. Facial recognition systems are used in several other domains today, and in general they follow these steps:

Step 1: Face Detection

The camera detects and locates an image of a face, either alone or in a crowd. The image may depict the person looking straight at the camera or in profile.

Step 2: Face Analysis

The face image is captured and analyzed. The software examines the geometry of the face and some key factors, which include variables such as the distance between the eyes, the depth of the eye sockets, the distance between the forehead and the chin, the shape of the cheekbones, and the contour of the lips, eyes, and chin. These measurements capture the main features of the face and are essential for distinguishing it from others.
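As a rough illustration of this step, the sketch below derives a few of the measurements mentioned above from landmark coordinates. The landmark names, the pixel values, and the `face_geometry` helper are all hypothetical; a real system would obtain landmarks from a detector and use many more of them.

```python
import math

# Hypothetical 2D landmark positions in pixels (assumed for illustration;
# a real face detector would supply these).
LANDMARKS = {
    "left_eye": (30.0, 40.0),
    "right_eye": (70.0, 40.0),
    "forehead": (50.0, 10.0),
    "chin": (50.0, 110.0),
}


def face_geometry(landmarks):
    """Return simple geometric measurements derived from landmark coordinates."""
    eye_distance = math.dist(landmarks["left_eye"], landmarks["right_eye"])
    face_height = math.dist(landmarks["forehead"], landmarks["chin"])
    return {
        "eye_distance": eye_distance,
        "face_height": face_height,
        # Dividing by the face height makes the value independent of image size.
        "eye_to_height_ratio": eye_distance / face_height,
    }


measurements = face_geometry(LANDMARKS)
print(measurements)
```

Ratios are used alongside raw distances because the same face photographed closer or farther away would otherwise yield different numbers.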

Step 3: Convert the image into data

The face capture process transforms the information about the face into a set of digital information. This set of information is stored in the form of a numeric code called a faceprint. A person's faceprint is as unique as their fingerprints.

Step 4: Finding a match

The subject's faceprint is compared to a database of known faces. For example, the FBI can access up to 650 million photos from various government databases. On Facebook, any photo tagged with a person's name is entered into Facebook's database, which can be used for facial recognition. If the faceprint matches an image in a facial recognition database, an identification is made.
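The matching step can be sketched as a nearest-neighbor search over stored faceprints. This is a minimal sketch under stated assumptions, not how any particular system works: the three-number faceprints, the names in `DATABASE`, the `find_match` helper, and the threshold value are all invented for illustration, and production systems typically compare much longer vectors.

```python
import math


def find_match(probe, database, threshold):
    """Return the name of the closest enrolled faceprint, or None if no
    enrolled faceprint lies within `threshold` of the probe."""
    best_name, best_distance = None, float("inf")
    for name, faceprint in database.items():
        distance = math.dist(probe, faceprint)  # Euclidean distance
        if distance < best_distance:
            best_name, best_distance = name, distance
    return best_name if best_distance <= threshold else None


# Hypothetical enrolled faceprints (assumed values).
DATABASE = {
    "alice": [0.40, 0.62, 0.31],
    "bob": [0.55, 0.48, 0.27],
}

match = find_match([0.41, 0.61, 0.30], DATABASE, threshold=0.05)
no_match = find_match([0.90, 0.10, 0.80], DATABASE, threshold=0.05)
print(match, no_match)
```

The threshold controls the trade-off between the two kinds of error: a looser threshold reports more matches, including wrong ones, while a stricter one misses more genuine matches.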

Opportunities and Risks

Facial recognition promises great benefits for individuals and communities. Feedback from law enforcement in the states where it is already in use shows that there is great hope that this technology will improve policing. It should enable easy identification of suspicious persons, thereby increasing the safety of the entire community. It also offers great convenience to individual users. There might no longer be any need for long and complicated passwords or keywords that one has to memorize: a person's face would give them exclusive access to confidential content. The technology would automate the matching of faces to persons, saving time for people who are searching for someone online, whether they are tagging friends in a Facebook photo or running a query in a search engine. The range of possible applications is wide, and the growing popularity of the technology would certainly widen it further.

But the great benefits do not come without great risks. The main concern lies in the potential for abuse in the public sector. Abuse of facial recognition by public authorities has already been documented in China, where it has been used for racial profiling and control of Uighur Muslims. China, however, is not the only state that employs facial recognition in the public sector. For instance, Russia, France, the UK, and Israel have either expressed interest in the idea or are already employing facial recognition for security or law enforcement purposes. The problem with such a powerful technology is that it enables a state to effectively surveil individuals. Described in the New York Times as “one of the most powerful surveillance tools ever made”, it enables a state to identify protestors, receive information about the movement of individuals, and track their every public appearance.

The potential for abuse exists in the private sector as well. The value of personal data in today’s digital economy has made possible the rise of some of the biggest and most influential companies in the history of the world. Modern multinational companies have enormous power due to the vast amounts of data under their control, backed up by sophisticated algorithms and world-class scientists and experts. The biometric data used in facial recognition give private companies a wide range of potential uses for this technology, which can undermine the data privacy of individuals.

Another risk posed by facial recognition is inaccuracy. If it fails to match a face with the right person, the consequences might be devastating, especially in sensitive areas of application such as law enforcement. Although the technology is rapidly improving, it is still not completely trustworthy, especially in the real world, where the conditions for face identification are not perfect. While accuracy might not be that critical in the case of Instagram’s search engine, bank transfers authorized by facial recognition need to be almost flawless. The importance of accuracy therefore depends on the field of application.

Inaccuracy might have various causes and consequences of differing gravity. The term algorithmic bias refers to types of inaccuracy that create unfair outcomes as a consequence of a systematic error in the technology or in the ways it is used. There are many ways to render the technology inaccurate. One might feed it data that reflects the personal preferences of the persons providing it, or the design of the algorithm might be erroneous. The existing record shows a history of sexism, racism, and discrimination based on ethnicity connected to the use of facial recognition.
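One common way to make such systematic errors visible is to compute an error rate separately for each demographic group. The sketch below does this for the false match rate, the share of different-person comparisons that the system wrongly reports as a match. The records in `RESULTS`, the group labels, and the helper name are hypothetical and do not describe any real system or study.

```python
from collections import defaultdict

# Hypothetical evaluation records (assumed for illustration):
# (group, system_reported_match, truly_same_person)
RESULTS = [
    ("group_a", True, True),
    ("group_a", False, False),
    ("group_a", False, False),
    ("group_a", True, False),
    ("group_b", True, False),
    ("group_b", True, False),
    ("group_b", False, False),
    ("group_b", True, True),
]


def false_match_rate_by_group(results):
    """Per group, the share of different-person comparisons reported as matches."""
    false_matches = defaultdict(int)
    impostor_trials = defaultdict(int)
    for group, reported_match, same_person in results:
        if not same_person:  # only different-person comparisons count here
            impostor_trials[group] += 1
            if reported_match:
                false_matches[group] += 1
    return {g: false_matches[g] / impostor_trials[g] for g in impostor_trials}


rates = false_match_rate_by_group(RESULTS)
print(rates)
```

In this toy data the false match rate for `group_b` is twice that of `group_a`, the kind of gap that would make misidentification more likely for one group than for another.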

Although the benefits of facial recognition are undeniable, so are the risks. The potential magnitude of the risks has even led some lawmakers around the world to consider banning the technology altogether. For instance, the US and the EU are currently considering what should be done to curb the risks. The potential measures range from a time-limited moratorium, through a ban on the use of facial recognition in sensitive sectors such as law enforcement, to a complete ban.

This is an introductory blog post in the series on facial recognition by the Internet & Just Society. The topic deserves a deeper treatment, one that depends on the area where the technology is to be applied. For this reason, the following blog posts in this series will be devoted to facial recognition in specific sectors where its impact might be the most striking.

Bibliography

Martinez A.M. (2009) Face Recognition, Overview. In: Li S.Z., Jain A. (eds) Encyclopedia of Biometrics. Springer, Boston, MA. https://doi.org/10.1007/978-0-387-73003-5_84

Elena Beretta received her M.Sc. in Economics and Statistics from the University of Turin in September 2016, with an experimental thesis investigating the diffusion of innovation through agent-based models. She earned a second-level Master's degree in Data Science for Complex Economic Systems at the Collegio Carlo Alberto in Moncalieri (TO) in June 2017.

From April 2017 to September 2017 she completed an internship at DESPINA, the Laboratory on Big Data Analytics at the Department of Economics and Statistics of the University of Turin, working on the NoVELOG project ("New Cooperative Business Models and Guidance for Sustainable City Logistics"). In November 2017 she began collaborating, as a PhD student and effective member, with the Nexa Center for Internet & Society at the Politecnico of Turin and with Fondazione Bruno Kessler (Trento), working on a project on Data and Algorithms Ethics. Her current research focuses on improving the impact of automatic decision-making systems on society through the implementation of models involving data on human behavior. Specific fields of interest include data science, machine learning, recommendation systems, and computational social sciences.

Nasir Muftić is a Ph.D. student at the Faculty of Law of the University of Sarajevo. His research interests include legal regulation of artificial intelligence with a focus on liability. He graduated from the Faculty of Law of the University of Sarajevo in 2016 and obtained an LL.M. degree in International Business Law from the Central European University in 2017. He completed traineeships at the Constitutional Court of Federation of Bosnia and Herzegovina and the Supreme Court of Federation of Bosnia and Herzegovina. Prior to the commencement of his doctoral studies, Nasir worked as a legal assistant at BH Telecom JSC in fields of media and telecommunications law, civil litigation, intellectual property law, commercial contract drafting, and regulatory compliance.
